mathematical intuition for transformers

Transformers have been the major architecture driving huge advances in deep learning, and they have been found to scale effectively to billions of parameters. In this post, I try to mathematically break down the core concepts of the transformer architecture, such as self-attention, the residual stream and the flow of inputs through the layers.

high-level overview

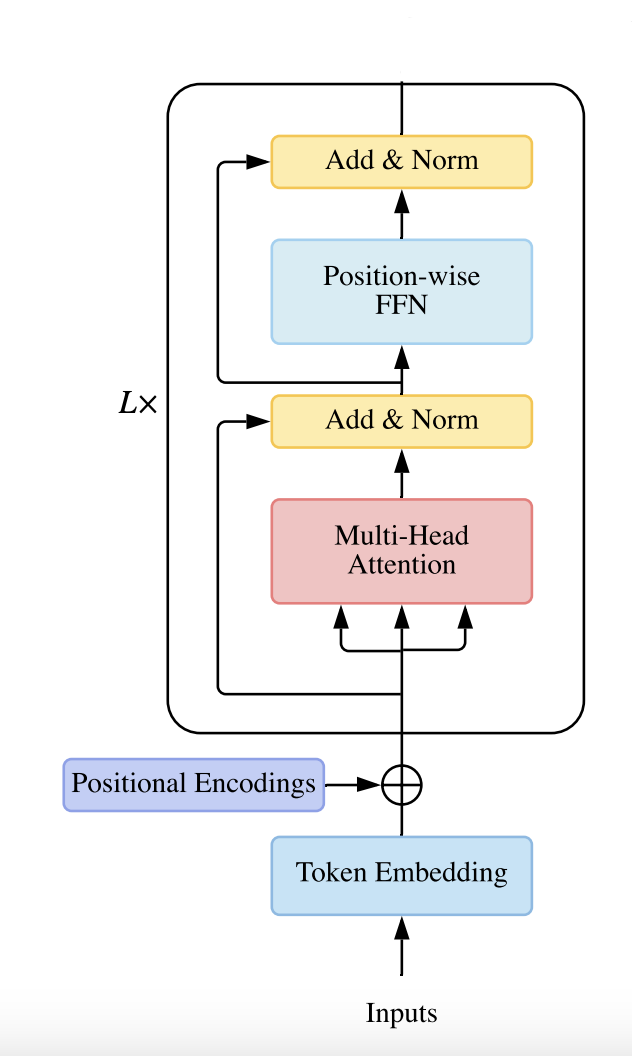

- A transformer starts with a token embedding (representing each token as a $d_{\text{model}}$-dimensional vector), followed by a series of "residual" blocks and finally a token unembedding. Each residual block consists of an attention layer followed by an MLP layer. Both the attention and MLP layers "read" their input from the residual stream and then "write" their result to the residual stream by adding a linear projection back in. Each attention layer consists of multiple heads which operate in parallel.

- The self-attention layer together with the MLP layer is called a "residual block". The residual stream refers to the flow of token representations as they pass through the layers of the transformer. Instead of completely rewriting its input with a new representation, each layer reads from the stream, processes what it reads and adds its result back into the stream, which allows information to be preserved across layers.

- The input to a residual block is either the token embedding (for the first block) or the output of the previous residual block. Each layer in the block takes the current token representations from the residual stream as input, and its output is "written back" to the stream. The attention layer processes the token representations and adds its output to the residual stream; the updated stream then becomes the input to the MLP layer. The MLP layer reads this updated stream, processes it and adds ("writes") its output back. Because each write is additive rather than a replacement, the original token representation is preserved while the new information computed by the attention or MLP layer is incorporated. Information always flows forward through the network.

- A LayerNorm is applied to the input before the attention and MLP layers to stabilize training. It normalizes each token's activations to a mean of 0 and a variance of 1 (and then applies a learned scale and shift).

- Mathematically, for a residual block with LayerNorm before the attention and MLP layers and input $x$:

- Normalize the input activations: $\hat{x} = \text{LayerNorm}(x)$

- The output from the attention layer is added ("written") back to the residual stream: $h = x + \text{Attention}(\hat{x})$

- LayerNorm is applied to the above output before it goes into the MLP: $\hat{h} = \text{LayerNorm}(h)$

- The MLP processes the normalized input and its output is again added back to the residual stream, giving the output of the residual block: $y = h + \text{MLP}(\hat{h})$
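The four steps above can be sketched in a few lines of plain Python. This is a minimal sketch, assuming the pre-LN arrangement described here; `layer_norm`, `residual_block` and the stand-in sublayers are hypothetical names, and LayerNorm's learned scale and shift are omitted. Passing sublayers that output zeros shows that the block leaves the residual stream untouched when nothing is written to it.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize one token vector to zero mean and unit variance.

    The learned scale and shift of a real LayerNorm are omitted here.
    """
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def add(a, b):
    """Element-wise sum: how a sublayer "writes" into the residual stream."""
    return [u + v for u, v in zip(a, b)]


def residual_block(xs, attention, mlp):
    """Pre-LN residual block over a sequence of token vectors `xs`.

    `attention` maps the whole normalized sequence to a sequence of the same
    shape; `mlp` maps one normalized token vector to a vector of the same size.
    """
    # h = x + Attention(LayerNorm(x)): attention mixes information across positions
    attn_out = attention([layer_norm(x) for x in xs])
    hs = [add(x, a) for x, a in zip(xs, attn_out)]
    # y = h + MLP(LayerNorm(h)): the MLP acts on each position independently
    return [add(h, mlp(layer_norm(h))) for h in hs]


# Hypothetical stand-in sublayers that write nothing (all zeros) to the stream.
def zero_attention(vs):
    return [[0.0] * len(v) for v in vs]


def zero_mlp(v):
    return [0.0] * len(v)


xs = [[1.0, 2.0, 3.0], [0.5, -1.0, 4.0]]
print(residual_block(xs, zero_attention, zero_mlp))  # [[1.0, 2.0, 3.0], [0.5, -1.0, 4.0]]
```

Because each sublayer's output is added rather than substituted, a sublayer that has nothing useful to contribute simply leaves the stream as it was.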

- Note that a linear projection transforms the input by multiplying it with a weight matrix. It maps the input from one space to another, changing its dimensionality or transforming its representation:

$$y = xW + b$$

where $x$ is the input (or token embedding), $W$ is the learnable weight matrix and $b$ is the optional bias term.

- The MLP layer is a fully connected feed-forward network consisting of two linear transformations with a nonlinear activation in between (ReLU in the original Transformer): $\text{MLP}(x) = \max(0, xW_1 + b_1)W_2 + b_2$.
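A linear projection and the two-layer MLP can be written directly from the formulas above. This is a plain-Python sketch with hypothetical names (`linear`, `relu`, `mlp`) and made-up toy weights; a real model would learn $W_1, b_1, W_2, b_2$ and typically uses a hidden width several times larger than $d_{\text{model}}$.

```python
def linear(x, W, b=None):
    """Linear projection y = xW + b for a row vector x (length n) and W with n rows."""
    n_out = len(W[0])
    y = [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(n_out)]
    if b is not None:
        y = [v + bj for v, bj in zip(y, b)]
    return y


def relu(v):
    """ReLU activation: max(0, u) element-wise."""
    return [max(0.0, u) for u in v]


def mlp(x, W1, b1, W2, b2):
    """MLP(x) = max(0, xW1 + b1) W2 + b2: expand to the hidden width, ReLU, project back."""
    return linear(relu(linear(x, W1, b1)), W2, b2)


# Toy example: d_model = 2, hidden width = 3 (weights are made up for illustration).
W1 = [[1.0, -1.0, 0.5],
      [0.0, 2.0, -1.0]]
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0],
      [0.0, 1.0],
      [1.0, 1.0]]
b2 = [0.1, -0.1]
print(mlp([1.0, 1.0], W1, b1, W2, b2))  # ≈ [1.1, 0.9]
```

Here the hidden pre-activation is $[1, 1, -0.5]$; the ReLU zeroes the negative entry before the second projection maps the result back to two dimensions.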

- The fact that the attention and MLP layers each "read" their input from the residual stream by performing a linear projection means that the token representations are linearly transformed before being used by the attention mechanism or by the MLP's feed-forward processing.

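- The token embeddings $X$ are projected into query, key and value matrices using linear projections: $Q = XW^Q$, $K = XW^K$, $V = XW^V$.

- The attention mechanism then computes the attention scores and applies them to the values $V$: $\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$, where $d_k$ is the dimension of the keys.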

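- The output of the attention mechanism can be thought of as a weighted sum of the values.

- Putting it together, a residual block of a transformer computes $h = x + \text{Attention}(\text{LayerNorm}(x))$ and then $y = h + \text{MLP}(\text{LayerNorm}(h))$.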
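The matrix form of single-head attention can be sketched as follows. This is a minimal pure-Python version (no NumPy) with hypothetical helper names; the weight matrices are passed in explicitly rather than learned. Setting $W^Q$ to zeros makes every attention score equal, so each output row becomes the plain average of the value rows, which is an easy way to check the weighted-sum interpretation.

```python
import math


def matmul(A, B):
    """Multiply an (n x m) matrix A by an (m x p) matrix B, both lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax(row):
    """Softmax of one row; subtracting the max avoids overflow in exp."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(X, W_Q, W_K, W_V):
    """softmax(Q K^T / sqrt(d_k)) V with Q = X W_Q, K = X W_K, V = X W_V."""
    Q, K, V = matmul(X, W_Q), matmul(X, W_K), matmul(X, W_V)
    d_k = len(K[0])
    K_T = [list(col) for col in zip(*K)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(Q, K_T)]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, V)  # each output row is a weighted sum of the rows of V


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 tokens, d_model = 2
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
print(attention(X, zeros, identity, identity))  # every row ≈ [2/3, 2/3], the mean of V = X
```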

self-attention

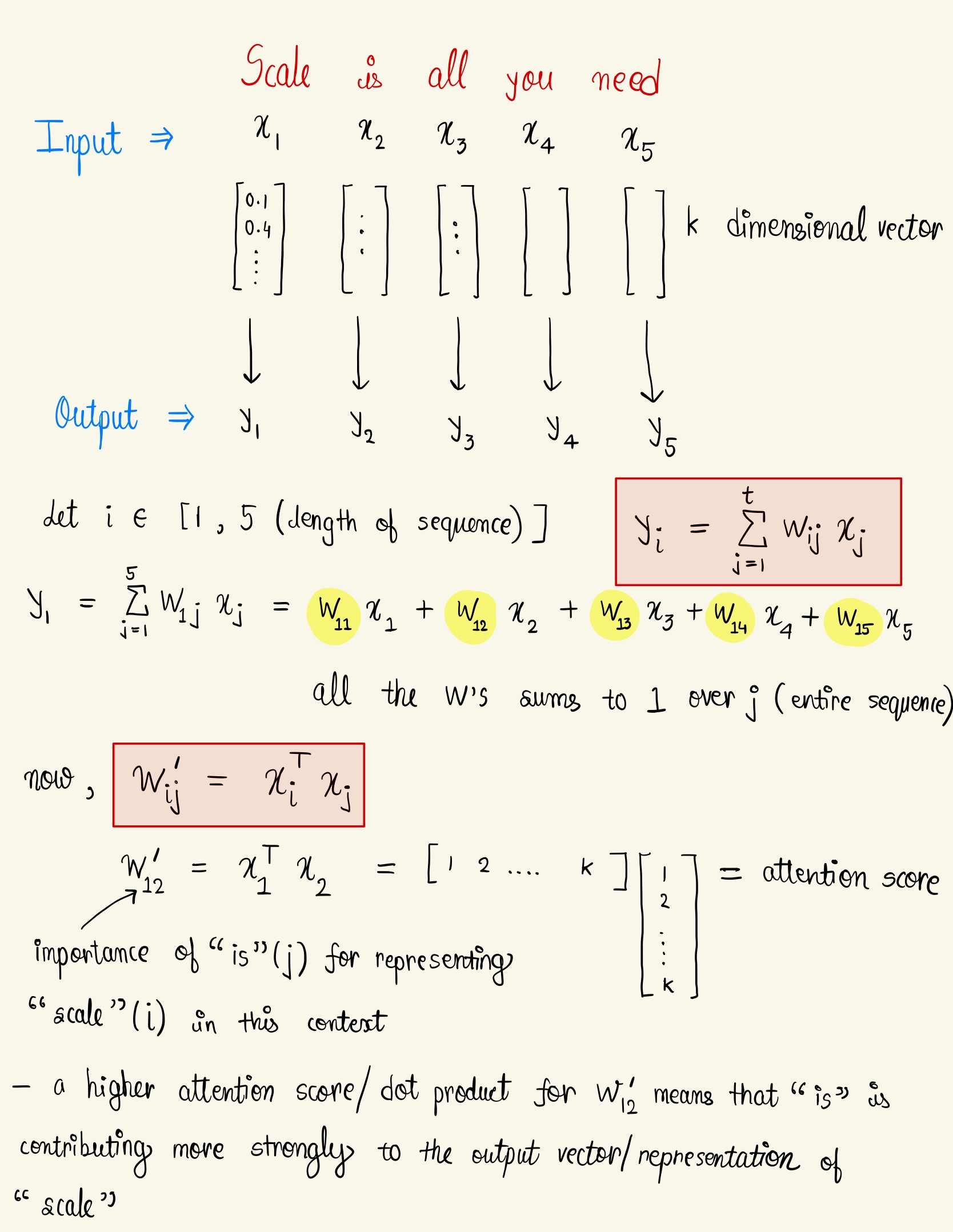

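- Self-attention is a sequence-to-sequence operation: a sequence of vectors goes in and a sequence of vectors comes out. Let's call the input vectors $\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_t$ and the corresponding output vectors $\mathbf{y}_1, \mathbf{y}_2, \ldots, \mathbf{y}_t$. The vectors all have dimension $k$.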

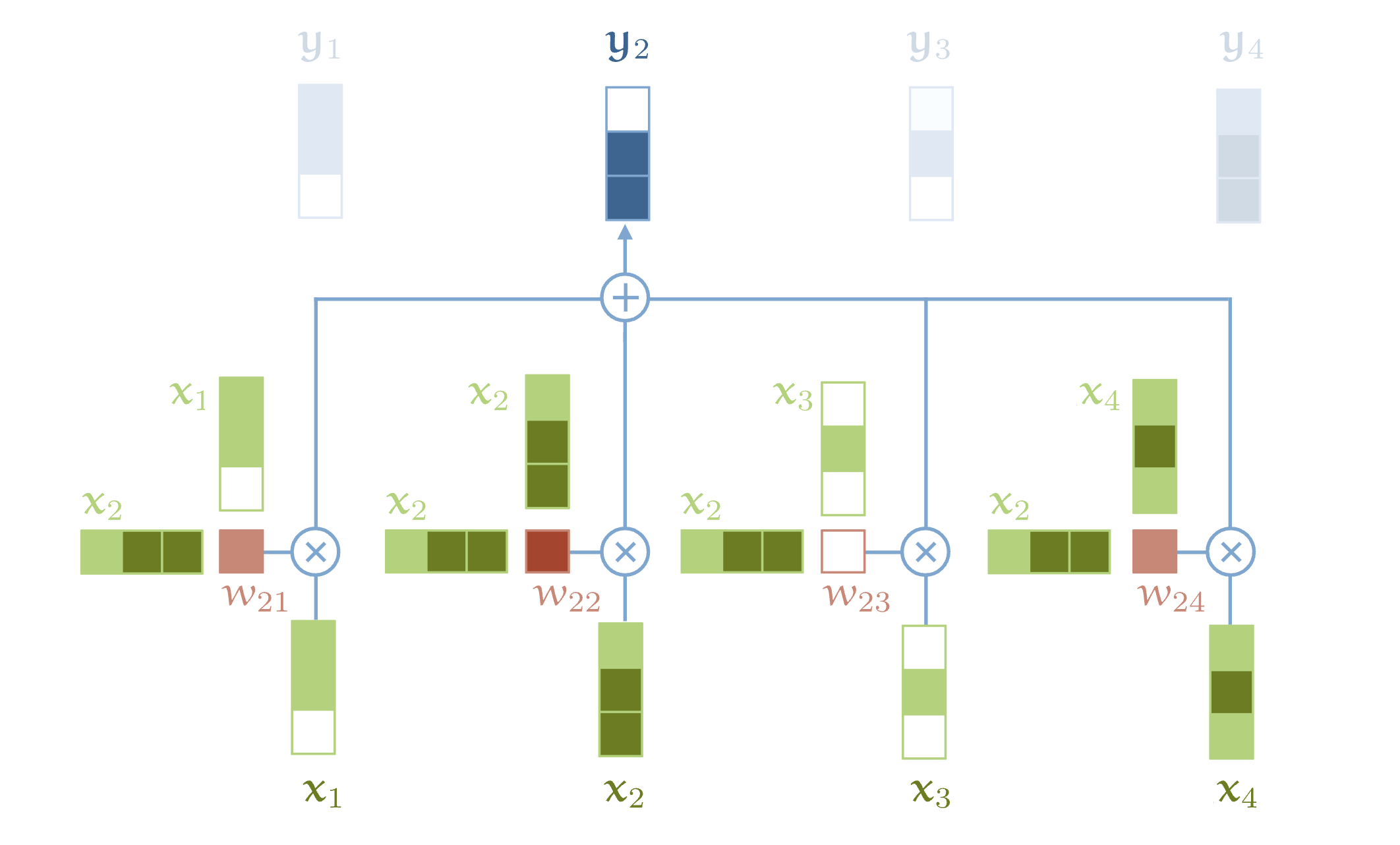

- To produce the output vector $\mathbf{y}_i$, the self-attention operation takes a weighted average over all the input vectors:

$$\mathbf{y}_i = \sum_j w_{ij}\,\mathbf{x}_j$$

where $j$ indexes over the whole sequence and the weights sum to one over all $j$.

- The weight $w_{ij}$ is not a fixed parameter but is derived from a function of $\mathbf{x}_i$ and $\mathbf{x}_j$, namely their dot product:

$$w'_{ij} = \mathbf{x}_i^\top \mathbf{x}_j$$

The raw weight $w'_{ij}$ represents the strength of the relationship between positions $i$ and $j$ in the sequence; these are the raw "attention scores". $\mathbf{x}_i$ is the input vector at the same position as the current output vector $\mathbf{y}_i$. For the next output vector, you get an entirely new series of dot products and a different weighted sum.

- The output vector $\mathbf{y}_i$ is a new representation of $\mathbf{x}_i$ that takes into account its relationship with all the other words in the input sentence.

- The dot product expresses how related two vectors in the input sequence are. It can also be thought of as a measure of similarity or "alignment" between the two vectors $\mathbf{x}_i$ and $\mathbf{x}_j$: a higher dot product means the vectors are more aligned, i.e. more relevant to each other.

- A softmax function is applied to the dot products to map the values into the range $(0, 1)$ and to ensure that they sum to 1 over the whole sequence:

$$w_{ij} = \frac{\exp w'_{ij}}{\sum_j \exp w'_{ij}}$$

- Note that the values in the embedding weight matrix are learned during training. The embedding weight matrix has dimension $|\mathcal{V}| \times k$, where $|\mathcal{V}|$ is the vocabulary size and each row is the embedding vector for one token in the vocabulary. How "related" two tokens are is entirely determined by the task that you're training for.

- Self-attention treats its inputs as a set of elements rather than an ordered sequence, meaning it doesn't inherently consider the order in which the elements appear. It is permutation equivariant: if you permute the input elements, the output elements are permuted in exactly the same way.

- Every input vector $\mathbf{x}_i$ is used in three different ways in the self-attention operation:

- it is compared to every other vector to calculate the weights for its own output $\mathbf{y}_i$.

- it is compared to every other vector to calculate the weights for the output $\mathbf{y}_j$ of the $j$-th vector.

- it is used as part of the weighted sum to compute each output vector once the weights have been calculated.
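The basic, parameter-free version of self-attention described in this section (raw weights $w'_{ij} = \mathbf{x}_i^\top\mathbf{x}_j$, a softmax over $j$, then a weighted average of the $\mathbf{x}_j$) fits in one short function. This is an illustrative pure-Python sketch; the function name is hypothetical. The usage lines also check the permutation equivariance mentioned above: shuffling the inputs shuffles the outputs in the same way.

```python
import math


def basic_self_attention(xs):
    """y_i = sum_j w_ij x_j with w_ij = softmax over j of x_i . x_j (no learned weights)."""
    ys = []
    for x_i in xs:
        raw = [sum(a * b for a, b in zip(x_i, x_j)) for x_j in xs]  # raw scores w'_ij
        m = max(raw)
        exps = [math.exp(r - m) for r in raw]
        total = sum(exps)
        w = [e / total for e in exps]  # w_ij: positive and summing to 1 over j
        # weighted average of all input vectors, one dimension at a time
        ys.append([sum(w[j] * xs[j][d] for j in range(len(xs))) for d in range(len(x_i))])
    return ys


xs = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
perm = [2, 0, 1]  # a reordering of the three positions
ys = basic_self_attention(xs)
ys_perm = basic_self_attention([xs[p] for p in perm])
# Permutation equivariance: output r of the shuffled input is output perm[r] of the original.
for r, p in enumerate(perm):
    print(ys_perm[r], ys[p])  # equal up to floating-point rounding
```

Every input vector plays all three roles here at once, which is exactly the limitation the learned query, key and value projections address.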

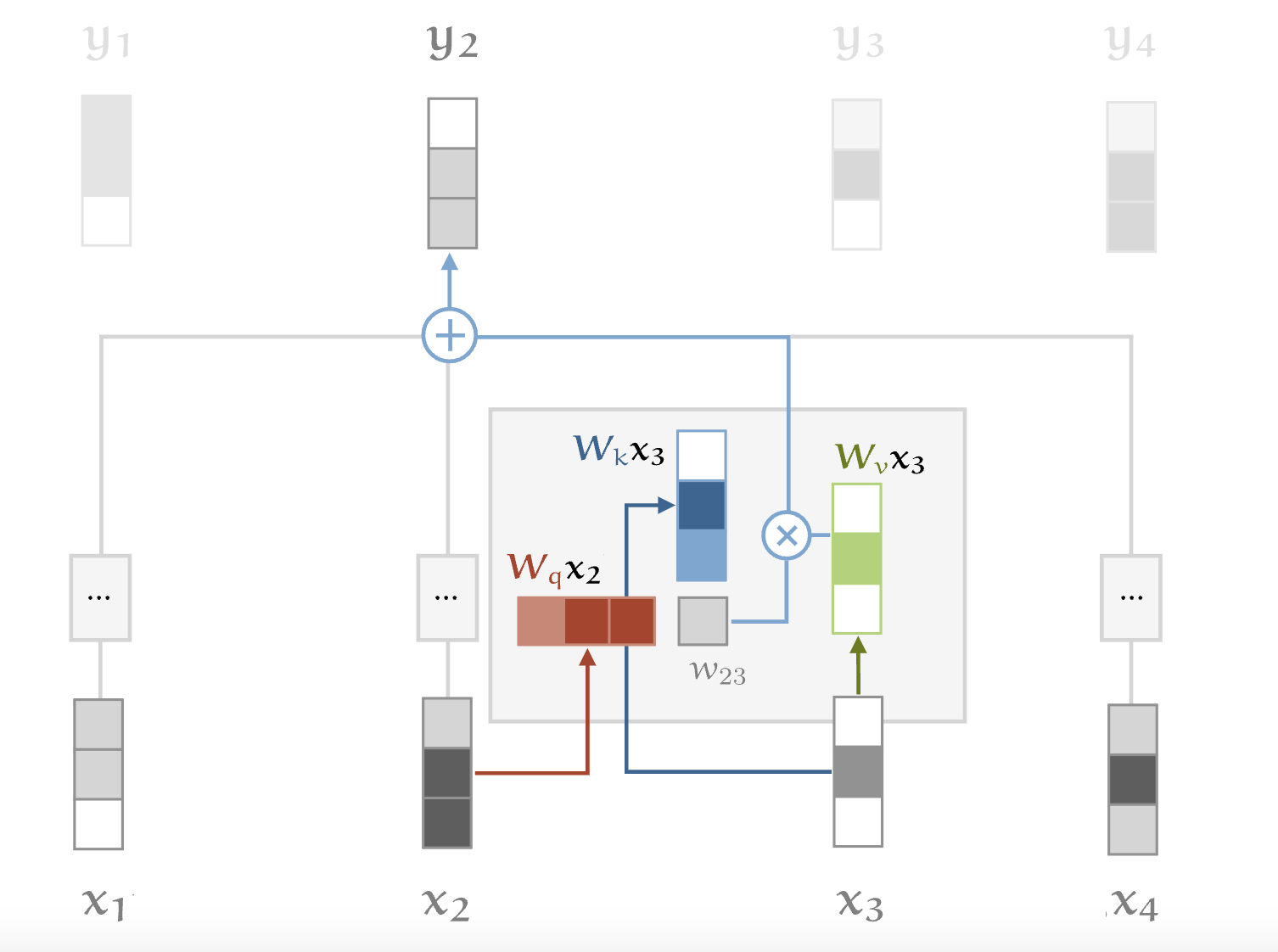

- We compute three linear transformations of the original input vector $\mathbf{x}_i$ by multiplying it with three learned $k \times k$ matrices $W_q$, $W_k$ and $W_v$, which transform the input into specialized representations for three different roles in self-attention:

- The query vector $\mathbf{q}_i = W_q\mathbf{x}_i$ represents what the input vector $\mathbf{x}_i$ is looking for in other input vectors and is used to compute attention scores with all other input vectors. It encodes what to look for in the other input tokens.

- The key vector $\mathbf{k}_i = W_k\mathbf{x}_i$ represents how $\mathbf{x}_i$ presents itself to other input vectors, that is, its relevance or importance to them. It describes what the token itself represents and how it will contribute to other tokens' representations.

- The value vector $\mathbf{v}_i = W_v\mathbf{x}_i$ is the representation of $\mathbf{x}_i$ that is actually used in the weighted sum when computing the output vectors.

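- The query-key dot product $w'_{ij} = \mathbf{q}_i^\top\mathbf{k}_j$ lets each token "attend" to the other tokens, i.e. it measures how relevant each token is to every other token. After the softmax, the output is the weighted sum of the value vectors using these attention weights: $\mathbf{y}_i = \sum_j w_{ij}\mathbf{v}_j$.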

- In practice, we compute the attention function on a set of queries simultaneously, packed together into a matrix $Q$. The keys and values are also packed together into matrices $K$ and $V$.
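The three roles can be made explicit by computing a single output vector $\mathbf{y}_i$ from the query of $\mathbf{x}_i$ and the keys and values of every $\mathbf{x}_j$. This is a hedged pure-Python sketch with hypothetical names and made-up matrices; it follows the unscaled formulas of this section (no division by $\sqrt{k}$), and $W_q, W_k, W_v$ are supplied by the caller instead of being learned.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(W, x):
    """Matrix-vector product W x, with W given as a list of rows."""
    return [dot(row, x) for row in W]


def self_attention_output(i, xs, W_q, W_k, W_v):
    """Compute y_i = sum_j softmax_j(q_i . k_j) v_j for one position i."""
    q_i = matvec(W_q, xs[i])               # query: what x_i is looking for
    keys = [matvec(W_k, x) for x in xs]    # keys: how each x_j presents itself
    values = [matvec(W_v, x) for x in xs]  # values: what each x_j contributes
    raw = [dot(q_i, k_j) for k_j in keys]  # raw scores w'_ij
    m = max(raw)
    exps = [math.exp(r - m) for r in raw]
    total = sum(exps)
    w = [e / total for e in exps]          # attention weights w_ij
    return [sum(w[j] * values[j][d] for j in range(len(xs)))
            for d in range(len(values[0]))]


xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_q = [[0.5, -0.5], [1.0, 0.0]]  # made-up 2 x 2 matrices (k = 2)
W_k = [[1.0, 0.0], [0.0, 1.0]]
W_v = [[2.0, 0.0], [0.0, 1.0]]
print([self_attention_output(i, xs, W_q, W_k, W_v) for i in range(len(xs))])
```

Stacking the query vectors as rows of $Q$ (and likewise for $K$ and $V$) turns this per-position loop into the matrix products used in practice.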

Scaled dot-product

- The softmax function is very sensitive to large input values: the exponential grows very rapidly for large positive inputs and is almost zero for large negative ones, so the output saturates (one weight close to 1, the rest close to 0), which kills the gradient and slows down training. Dot products grow with the dimension $k$ of the embedding vectors: imagine a vector in $\mathbb{R}^k$ with all values equal to $c$. Its Euclidean length is $\sqrt{k}\,c$. So it helps to scale the dot product back by the amount by which the increase in dimension increases the length of average vectors, which stops the inputs to the softmax from growing too large:

$$w'_{ij} = \frac{\mathbf{q}_i^\top \mathbf{k}_j}{\sqrt{k}}$$
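The saturation effect and the $\sqrt{k}$ fix can be checked numerically. In this small sketch (toy vectors chosen for illustration, not taken from a real model) a query is compared with two slightly different keys of dimension $k = 512$: the unscaled scores push the softmax to an almost one-hot output whose local gradient $p(1 - p)$ is essentially zero, while the scaled scores give a softer distribution.

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


k, c = 512, 1.0
q = [c] * k                            # a vector in R^k with all values equal to c
keys = [[c] * k, [0.9 * c] * k]        # two keys that are almost equally aligned with q

length = math.sqrt(sum(v * v for v in q))
print(length, math.sqrt(k) * c)        # both are sqrt(k) * c

raw = [sum(a * b for a, b in zip(q, key)) for key in keys]  # dot products grow with k
scaled = [r / math.sqrt(k) for r in raw]                   # divide by sqrt(k)

p_raw, p_scaled = softmax(raw), softmax(scaled)
print(p_raw)     # nearly one-hot: the softmax has saturated
print(p_scaled)  # a much softer distribution over the two keys

# d p_0 / d s_0 = p_0 * (1 - p_0): close to 0 when saturated, so little gradient flows
print(p_raw[0] * (1 - p_raw[0]), p_scaled[0] * (1 - p_scaled[0]))
```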

Multi-head attention

- In a single self-attention operation, all the contextual information for a token is mixed into one weighted sum.

- By applying several self-attention mechanisms in parallel, each with its own key, query and value matrices, we can capture diverse relationships between the input tokens and the different ways they influence each other. These parallel mechanisms are called attention heads.

- In multi-head attention, each head receives low-dimensional keys, queries and values. If the input vector has $k = 256$ dimensions and we have $h = 4$ attention heads, each head multiplies the 256-dimensional input vectors by $256 \times 64$ weight matrices to project them down to 64-dimensional vectors ($64 = 256 / 4$). While this projection cannot perfectly preserve all the information from the higher-dimensional space, the 4 different heads can focus on different aspects of the input.

- Multi-head attention allows the model to jointly attend to information (i.e., focus on different aspects of the input simultaneously) from different representation subspaces (since each head projects into a different lower-dimensional space) at different positions (different parts of the input sentence).

- The outputs from all attention heads are then concatenated, combining the information from the different subspaces that each head has focused on (bringing the four 64-dimensional outputs back up to $4 \times 64 = 256$ dimensions). The combined output is then projected with the weight matrix $W^O \in \mathbb{R}^{hd_v \times d_{\text{model}}}$, where $d_v$ is the per-head value dimension (64 here) and $d_{\text{model}}$ is the model dimension (256 here):

$$\text{MultiHead}(Q, K, V) = \text{Concat}(\text{head}_1, \ldots, \text{head}_h)\,W^O$$

This projection transforms the concatenated output into the appropriate dimensionality for the next layer. Because $W^O$ is learnable, the model can learn how best to combine the information from the different heads and capture more complex relationships between the features they extract.
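The split, project, concatenate and project-again pipeline can be sketched end to end. This is a minimal pure-Python sketch under stated assumptions: the projection matrices are random (seeded with Python's `random.Random`) and stand in for learned weights, the helper names are hypothetical, and each head uses scaled dot-product attention. With $k = 256$ and $h = 4$ each head works in 64 dimensions, and the output returns to 256 dimensions.

```python
import math
import random


def matmul(A, B):
    """Multiply an (n x m) matrix A by an (m x p) matrix B, both lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def rand_matrix(rows, cols, rng):
    """Random stand-in for a learned weight matrix."""
    return [[rng.gauss(0.0, 1.0 / math.sqrt(rows)) for _ in range(cols)] for _ in range(rows)]


def attention_head(X, W_Q, W_K, W_V):
    """Scaled dot-product attention inside one head's low-dimensional subspace."""
    Q, K, V = matmul(X, W_Q), matmul(X, W_K), matmul(X, W_V)
    K_T = [list(col) for col in zip(*K)]
    scale = math.sqrt(len(K[0]))
    weights = [softmax([s / scale for s in row]) for row in matmul(Q, K_T)]
    return matmul(weights, V)


def multi_head_attention(X, num_heads, rng):
    k = len(X[0])
    assert k % num_heads == 0, "model dimension must be divisible by the number of heads"
    d_head = k // num_heads  # e.g. 256 // 4 = 64
    heads = []
    for _ in range(num_heads):
        # each head has its own k x d_head query, key and value projections
        W_Q, W_K, W_V = (rand_matrix(k, d_head, rng) for _ in range(3))
        heads.append(attention_head(X, W_Q, W_K, W_V))
    # concatenate the heads position by position: num_heads * d_head = k dimensions
    concat = [sum((h[t] for h in heads), []) for t in range(len(X))]
    W_O = rand_matrix(num_heads * d_head, k, rng)  # output projection W^O
    return matmul(concat, W_O)


rng = random.Random(0)
X = rand_matrix(3, 256, rng)  # 3 tokens with k = 256 (random toy embeddings)
out = multi_head_attention(X, num_heads=4, rng=rng)
print(len(out), len(out[0]))  # 3 256
```

Splitting into heads keeps the total cost close to that of one full-width head, since each head works with $k/h$-dimensional queries, keys and values.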

Positional Encoding

- Since self-attention is permutation equivariant and has no built-in notion of order, positional encodings are used to inject information about the relative or absolute position of the tokens in the sequence. The positional encodings have the same dimension as the embeddings, so that the two can be summed. In the original Transformer they are not learned, unlike word embeddings, but generated by a predefined function.

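- The function $f: \mathbb{N} \to \mathbb{R}^{d_{\text{model}}}$ maps a position to a $d_{\text{model}}$-dimensional vector. Sine and cosine functions of different frequencies can be used:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right)$$

where $pos$ is the position in the sequence and $2i$ and $2i+1$ index the even and odd dimensions of the vector.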

- The sinusoidal functions may allow the model to extrapolate to sequence lengths longer than the ones encountered during training. For any fixed offset $k$, $PE_{pos+k}$ can be represented as a linear function of $PE_{pos}$. This property makes it easy for the model to learn to attend by relative position rather than absolute position: attention can learn a general pattern of "attend to $k$ steps away" instead of learning each absolute position pair separately.
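The sinusoidal encoding and its relative-position property can be checked directly. The sketch below is a plain-Python illustration with hypothetical function names, assuming an even $d_{\text{model}}$. `positional_encoding` implements the two formulas above, and `shift_by` computes $PE_{pos+k}$ from $PE_{pos}$ alone by rotating each (sin, cos) pair with coefficients that depend only on $k$, which is one such linear map.

```python
import math


def positional_encoding(pos, d_model):
    """PE(pos, 2i) = sin(pos / 10000^(2i/d_model)), PE(pos, 2i+1) = cos(same angle)."""
    assert d_model % 2 == 0, "this sketch assumes an even d_model"
    pe = [0.0] * d_model
    for even in range(0, d_model, 2):  # `even` plays the role of 2i
        angle = pos / (10000 ** (even / d_model))
        pe[even] = math.sin(angle)
        pe[even + 1] = math.cos(angle)
    return pe


def shift_by(pe, k, d_model):
    """Compute PE(pos + k) from PE(pos) with a fixed 2x2 rotation per frequency.

    The coefficients depend only on the offset k, not on pos, so this is a
    linear function of PE(pos).
    """
    out = [0.0] * d_model
    for even in range(0, d_model, 2):
        omega = 1.0 / (10000 ** (even / d_model))
        s, c = pe[even], pe[even + 1]
        # sin(a + b) = sin a cos b + cos a sin b;  cos(a + b) = cos a cos b - sin a sin b
        out[even] = s * math.cos(omega * k) + c * math.sin(omega * k)
        out[even + 1] = c * math.cos(omega * k) - s * math.sin(omega * k)
    return out


d_model = 16
embedding = [0.1] * d_model  # hypothetical token embedding
x = [e + p for e, p in zip(embedding, positional_encoding(5, d_model))]  # summed input

predicted = shift_by(positional_encoding(5, d_model), 3, d_model)
actual = positional_encoding(8, d_model)
print(max(abs(a - b) for a, b in zip(predicted, actual)))  # ~0 (floating-point error only)
```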

References

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017, June 12). Attention is all you need. arXiv.org. https://arxiv.org/abs/1706.03762

- Bloem, P. (n.d.). Transformers from scratch. peterbloem.nl. https://peterbloem.nl/blog/transformers